July 21, 2024

Building an Interview Preparation Assistant with LangChain and Ollama

Preparing for technical interviews can be hard. To make the process easier, I’ve built an Interview Preparation Assistant using LangChain and a model served through Ollama. The tool generates a technical question and a corresponding answer for a given topic and difficulty level. Here's a detailed look at how the application is built and how it can help users.

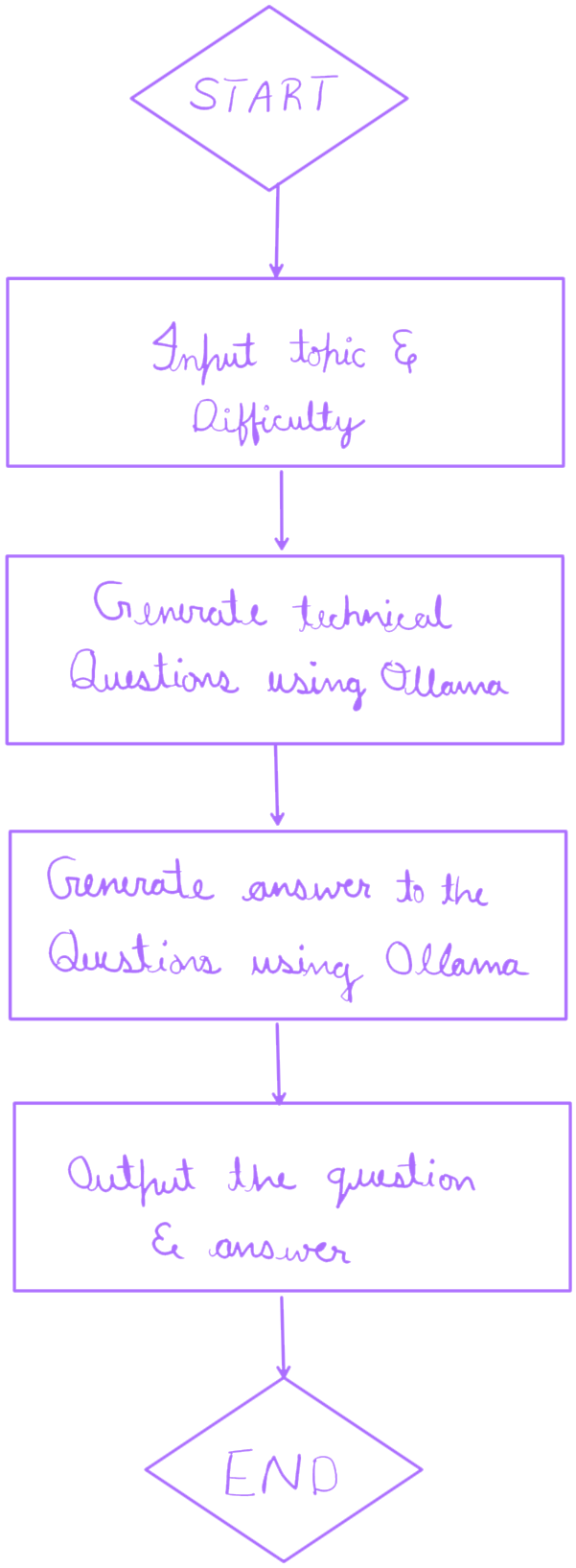

FLOW :

DEMO :

CONCEPT :

The Interview Preparation Assistant aims to give candidates technical questions and concise answers tailored to what they are preparing for. It combines LangChain’s chain-based structure with the language capabilities of a model run through Ollama, so that each question and answer stays relevant to the chosen topic and difficulty level.

CORE COMPONENTS :

LangChain is a framework for building applications powered by language models. It lets you compose components such as prompt templates and model calls into chains, so that multi-step workflows can be built from simple pieces.

Ollama Model: The assistant uses the Qwen2:0.5b model, run through Ollama, for its natural language processing capabilities. The model is used to produce specific, relevant responses for technical interview topics.

HOW IT WORKS :

The assistant uses a two-step process to generate interview preparation content:


1. Generating the Technical Question:

- A PromptTemplate defines how to ask for a question based on the given topic and difficulty level.

- An LLMChain runs this template through the Ollama model to produce exactly one technical question.


2. Generating the Answer:

- A second PromptTemplate asks for a brief answer to the question generated in the first step.

- This step also uses an LLMChain, with the prompt written to keep the answer accurate and focused directly on the question asked.

These two chains are combined through SequentialChain so that both question and answer can be generated in sequence.
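The two steps above can be sketched roughly as follows. This is a minimal illustration, not the exact code behind the assistant: the prompt wording, the function names `build_interview_chain` and `run_demo`, and the output keys `question` and `answer` are all assumptions. The import paths assume a LangChain 0.1 to 0.3 style installation with `langchain-community` available; newer releases may expose these classes elsewhere.

```python
# Prompt wording is illustrative; the original post does not show its exact text.
QUESTION_TEMPLATE = (
    "You are a technical interviewer. Write exactly one {difficulty}-level "
    "interview question about {topic}. Return only the question."
)
ANSWER_TEMPLATE = (
    "Give a concise, accurate answer to this interview question:\n{question}"
)


def build_interview_chain(llm):
    """Wire the question step and the answer step into one SequentialChain."""
    # Imported lazily so the templates can be inspected without LangChain installed.
    from langchain.chains import LLMChain, SequentialChain
    from langchain_core.prompts import PromptTemplate

    # Step 1: topic + difficulty -> question
    question_chain = LLMChain(
        llm=llm,
        prompt=PromptTemplate(
            input_variables=["topic", "difficulty"],
            template=QUESTION_TEMPLATE,
        ),
        output_key="question",
    )

    # Step 2: question -> answer (consumes the output key of step 1)
    answer_chain = LLMChain(
        llm=llm,
        prompt=PromptTemplate(
            input_variables=["question"],
            template=ANSWER_TEMPLATE,
        ),
        output_key="answer",
    )

    return SequentialChain(
        chains=[question_chain, answer_chain],
        input_variables=["topic", "difficulty"],
        output_variables=["question", "answer"],
    )


def run_demo(topic="Python decorators", difficulty="medium"):
    """Hypothetical entry point; needs a local Ollama server with qwen2:0.5b pulled."""
    from langchain_community.llms import Ollama

    chain = build_interview_chain(Ollama(model="qwen2:0.5b"))
    result = chain.invoke({"topic": topic, "difficulty": difficulty})
    print("Q:", result["question"])
    print("A:", result["answer"])
```

Calling `run_demo()` with Ollama running locally should print one question followed by its answer. Because the first chain writes to the `question` key and the second chain's prompt reads `{question}`, SequentialChain can pass the generated question along without extra glue code.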